Attention, memory, imagination, inference, and continual learning are cognitive functions of the human brain that are at the forefront of AI research.

The brain has always been considered the main inspiration for the field of artificial intelligence (AI). For many AI researchers, the ultimate goal of AI is to emulate the capabilities of the brain. That is a nice statement, but it is an incredibly daunting task, considering that neuroscientists are still struggling to understand the cognitive mechanisms that power the magic of our brains. Despite the challenges, we are increasingly seeing AI research and algorithm implementations that are inspired by specific cognitive mechanisms of the human brain and that have been producing incredibly promising results. In 2017, the DeepMind team published a paper about neuroscience-inspired AI that summarizes the circle of influence between AI and neuroscience research.

You might be wondering what is so new about this topic. Everyone knows that most foundational concepts in AI, such as neural networks, have been inspired by the architecture of the human brain. However, beyond that high-level statement, the relationship between the popular AI and deep learning models we use every day and neuroscience research is not so obvious. Let’s quickly review some of the brain processes that have left a footprint on the newest generation of deep learning methods.

Attention

Attention is one of those magical capabilities of the human brain that we don’t understand very well. What brain mechanisms allow us to focus on a specific task and ignore the rest of the environment? Attentional mechanisms have recently become a source of inspiration for deep learning models such as convolutional neural networks (CNNs) and deep generative models. For instance, CNN models that incorporate attention can build a schematic representation of the input and ignore irrelevant information, improving their ability to classify objects in a picture.
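To make the idea concrete, here is a minimal sketch of generic soft (dot-product) attention in plain Python. It is not the specific CNN architecture the article refers to. The function names, the toy keys and values, and the dot-product scoring are illustrative assumptions. Each input element receives a softmax weight according to how well its key matches a query, so poorly matching elements contribute almost nothing to the output.

```python
import math


def softmax(scores):
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(query, keys, values):
    """Return a weighted sum of `values`, weighted by how well each key matches `query`."""
    # Relevance score of each input element: dot product between query and key.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Elements with low weight contribute almost nothing, so they are effectively "ignored".
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical example: three input regions, and the query matches the second one.
keys = [[1.0, 0.0], [0.0, 5.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
output, weights = soft_attention([0.0, 1.0], keys, values)
print(weights)  # nearly all weight lands on the second region
print(output)
```

A low-weight element is not deleted, only down-weighted. This is what makes "soft" attention differentiable and trainable by gradient descent.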

Episodic Memory

When we remember autobiographical events, such as specific experiences or places, we are using a brain function known as episodic memory. This mechanism is most often associated with circuits in the medial temporal lobe, prominently including the hippocampus. Recently, AI researchers have tried to incorporate methods inspired by episodic memory into reinforcement learning (RL) algorithms, an approach known as episodic control. These networks store specific experiences (e.g., actions and reward outcomes associated with particular Atari game screens) and select new actions based on the similarity between the current input and the previous events stored in memory, taking the rewards associated with those previous events into account.
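The episodic-control idea can be sketched as a nearest-neighbor lookup over stored experiences. This is a hypothetical, simplified sketch in the spirit of model-free episodic control, not a published implementation. The following are all assumptions:

* states are plain coordinate tuples rather than embeddings of game screens
* the class name and the value of `k` are illustrative
* similarity is Euclidean distance
* revisited situations keep the best return seen so far

```python
import math


class EpisodicMemory:
    """Hypothetical episodic store: one buffer of (state, return) pairs per action."""

    def __init__(self, actions, k=3):
        self.k = k
        self.buffers = {a: [] for a in actions}

    def store(self, state, action, episodic_return):
        buf = self.buffers[action]
        for i, (s, r) in enumerate(buf):
            if s == state:
                # Revisited situation: keep the best return observed so far.
                buf[i] = (s, max(r, episodic_return))
                return
        buf.append((state, episodic_return))

    def estimate(self, state, action):
        buf = self.buffers[action]
        if not buf:
            return 0.0
        # Average the returns of the k most similar stored situations.
        nearest = sorted(buf, key=lambda item: math.dist(item[0], state))[: self.k]
        return sum(r for _, r in nearest) / len(nearest)

    def act(self, state):
        # Greedy choice: the action whose similar past experiences paid off most.
        return max(self.buffers, key=lambda a: self.estimate(state, a))


# Hypothetical experiences from two situations and two actions.
memory = EpisodicMemory(["left", "right"], k=1)
memory.store((0.0, 0.0), "left", 1.0)
memory.store((0.0, 0.0), "right", 0.0)
memory.store((5.0, 5.0), "left", 0.0)
memory.store((5.0, 5.0), "right", 2.0)
print(memory.act((0.1, 0.0)))  # prints left: closest to the first situation
print(memory.act((4.9, 5.0)))  # prints right: closest to the second situation
```

Estimates come from similar past situations. As a result, a situation the agent has never seen exactly can still reuse the rewards of its neighbors.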

Continual Learning

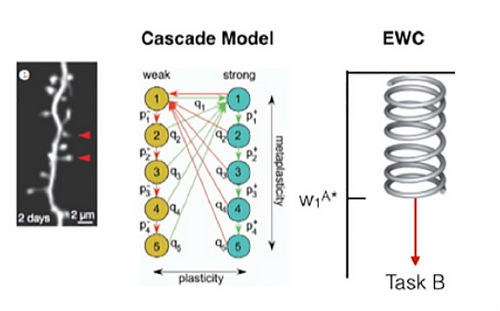

As humans, we have the ability to learn new tasks without forgetting previous knowledge. Neural networks, in contrast, suffer from what is known as catastrophic forgetting. This occurs, for instance, when a network’s parameters shift toward the optimal state for performing the second of two successive tasks, overwriting the configuration that allowed it to perform the first.

One of the recent deep learning techniques inspired by the brain’s capacity for continual learning is known as “elastic” weight consolidation (EWC). This method works by slowing down learning in a subset of network weights identified as important to previous tasks, thereby anchoring these parameters to previously found solutions. This allows multiple tasks to be learned without an increase in network capacity, with weights shared efficiently between tasks with related structure. In this way, EWC allows deep RL networks to support continual learning at large scale.
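EWC is usually written as a quadratic penalty added to the loss of the new task B: (λ/2) · Σᵢ Fᵢ (θᵢ − θ*ᵢ)². Here θ* are the weights learned on task A, and Fᵢ (a diagonal Fisher information estimate) measures how important weight i was to task A.

The sketch below is a toy two-weight example. The hand-picked Fisher values, the simple quadratic task-B loss, and the function names are all assumptions for illustration. In practice, the Fisher values are estimated from task-A data.

```python
def ewc_penalty(params, anchor, fisher, lam):
    """EWC term: (lam / 2) * sum_i F_i * (theta_i - anchor_i) ** 2."""
    return 0.5 * lam * sum(f * (p - a) ** 2 for p, a, f in zip(params, anchor, fisher))


def ewc_grad(params, anchor, fisher, lam):
    """Gradient of the EWC term with respect to each parameter."""
    return [lam * f * (p - a) for p, a, f in zip(params, anchor, fisher)]


def train_task_b(anchor, fisher, target, lam, lr=0.05, steps=2000):
    """Gradient descent on a toy task-B loss sum_i (theta_i - target_i) ** 2 plus the EWC term."""
    params = list(anchor)  # start from the task-A solution
    for _ in range(steps):
        task_grad = [2.0 * (p - t) for p, t in zip(params, target)]
        penalty_grad = ewc_grad(params, anchor, fisher, lam)
        params = [p - lr * (g + h) for p, g, h in zip(params, task_grad, penalty_grad)]
    return params


anchor = [1.0, 1.0]    # hypothetical weights learned on task A
fisher = [10.0, 0.0]   # hypothetical importance: weight 0 matters for task A, weight 1 does not
target = [-1.0, -1.0]  # hypothetical optimum of task B

with_ewc = train_task_b(anchor, fisher, target, lam=1.0)
without_ewc = train_task_b(anchor, fisher, target, lam=0.0)
print(with_ewc)     # weight 0 stays near 2/3, weight 1 moves to -1
print(without_ewc)  # both weights move to -1: task A is "forgotten"
```

With `lam=1.0`, the important weight settles at 2/3, the point where the task-B gradient 2(θ + 1) balances the penalty gradient 10(θ − 1). The unimportant weight moves all the way to task B's optimum. With `lam=0.0`, both weights drift to −1, which is the catastrophic-forgetting case.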

Imagination and Planning

One of my favorite definitions of consciousness is related to the ability of humans (and other species) to forecast and think about the future. Most deep learning systems still operate in highly reactive modes that make it very difficult to plan for longer-term outcomes. New areas of AI research have focused on simulation-based planning using deep generative models. In particular, recent work has introduced architectures that can generate temporally consistent sequences of samples reflecting the geometric layout of newly experienced realistic environments. This provides a parallel to the function of the hippocampus in binding together multiple components to create an imagined experience that is spatially and temporally coherent.
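Simulation-based planning can be illustrated with a very small depth-limited search. Instead of reacting to the current state, the agent "imagines" every short action sequence with a world model and commits to the first action of the best one.

This is a hypothetical sketch, not the generative architectures mentioned above. The hand-written `model` function stands in for a learned model. The action set, horizon, and reward are assumptions.

```python
from itertools import product


def model(state, action):
    """Hypothetical world model: predicts the next position and its reward."""
    next_state = state + action
    reward = -abs(next_state - 10)  # being close to position 10 is rewarded
    return next_state, reward


def plan(state, actions=(-1, 0, 1, 3), horizon=3):
    """Simulate every action sequence of length `horizon` and return the best one."""
    best_seq, best_return = None, float("-inf")
    for seq in product(actions, repeat=horizon):
        s, total = state, 0.0
        for a in seq:  # "imagined" rollout: no real environment is touched
            s, r = model(s, a)
            total += r
        if total > best_return:
            best_seq, best_return = seq, total
    # Commit only to the first action; a real agent would re-plan at the next step.
    return best_seq[0], best_seq


print(plan(0))  # (3, (3, 3, 3))
print(plan(9))  # (1, (1, 0, 0))
```

From position 0, the planner picks +3, the largest step toward the goal. From position 9, it picks the sequence (1, 0, 0), which reaches position 10 and then stays there. Exhaustive enumeration grows as `len(actions) ** horizon`, so it is only viable for tiny problems like this one.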

Inference

Human cognition is remarkable for its ability to learn new concepts by building on previous knowledge through inductive inference. In contrast, deep learning systems often rely on massive amounts of training data to master even the simplest tasks. Recent work in structured probabilistic methods and deep generative models has started to incorporate brain-inspired inference mechanisms into AI programs. These classes of models can make inferences about a new concept despite a poverty of data and can generate new samples from a single example of a concept. The rapidly growing field of meta-learning is another area of AI research inspired by the inference abilities of the human brain.
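One classic way to frame inference from a poverty of data is Bayesian concept learning. The sketch below is a toy version of the "number game" idea, not the specific models the article refers to. It rests on three assumptions:

* each hypothesis is a candidate concept over the numbers 1 to 100
* the prior is uniform
* each example is sampled uniformly from the true concept, so smaller concepts consistent with the data are favored (the "size principle")

The hypothesis names and sets are illustrative.

```python
def concept_posterior(examples, hypotheses):
    """Posterior over candidate concepts given positive examples (uniform prior)."""
    scores = {}
    for name, members in hypotheses.items():
        if all(x in members for x in examples):
            # Size principle: each example is assumed drawn uniformly from the concept,
            # so a smaller concept that still covers the data gets a higher likelihood.
            scores[name] = (1.0 / len(members)) ** len(examples)
        else:
            scores[name] = 0.0  # this concept cannot have produced the examples
    total = sum(scores.values())
    if total == 0.0:
        raise ValueError("no hypothesis is consistent with the examples")
    return {name: s / total for name, s in scores.items()}


numbers = range(1, 101)
hypotheses = {  # hypothetical candidate concepts over 1..100
    "even numbers": {n for n in numbers if n % 2 == 0},
    "powers of two": {2 ** k for k in range(7)},  # 1, 2, 4, ..., 64
    "multiples of ten": {n for n in numbers if n % 10 == 0},
}

print(concept_posterior([16], hypotheses))
print(concept_posterior([16, 8, 2, 64], hypotheses))
```

With the single example 16, "powers of two" already receives most of the posterior mass (about 0.88). A few more examples, such as 8, 2, and 64, make it nearly certain, even though "even numbers" is also consistent with every example.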

Article: Five Functions of the Brain That are Inspiring AI Research