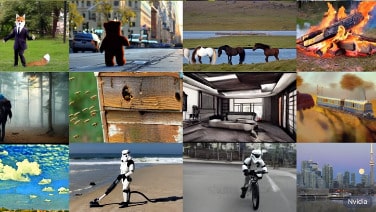

Nvidia turns Stable Diffusion into a text-to-video model, generates high-resolution video, and shows how the model can be personalized.

Nvidia’s generative AI model is based on diffusion models and adds a temporal dimension that enables temporal-aligned image synthesis over multiple frames. The team trains a video model to generate several minutes of video of car rides at a resolution of 512 x 1,024 pixels, reaching SOTA in most benchmarks.

In addition to this demonstration, which is particularly relevant to autonomous driving research, the researchers show how an existing Stable Diffusion model can be transformed into a video model.

Full aticle: Nvidia shows text-to-video for Stable Diffusion