This year, countries home to over half the world’s population will head to the ballot box. At a time when democracy is already under acute pressure in many nations, ensuring these elections are free and fair is of paramount importance.

In the context of this political instability, deepfakes have entered the stage – and this year they are poised to play a larger role than ever before.

Governments, whether through a lack of ability or of will, have failed to defend democracy sufficiently against deepfakes. However, where the public sector fails, there is an opportunity for the private sector to step in, both to safeguard democracy and to reap financial rewards in the process.

Biometric firms should be leading the way in providing the next generation of election-securing technology. The first step towards this must be reassuring regulators about the privacy of biometric data.

Global elections have become ground zero for a geopolitical struggle as Russia, China, and Iran all try to influence democratic votes. At the Munich Security Conference this year, FBI Director Christopher Wray sounded the alarm about deepfakes, warning that elections face an unprecedented risk of interference.

The recent US primaries were the canary in the coal mine. The FT reported that the New Hampshire attorney general was investigating an “artificially generated” imitation of President Joe Biden’s voice that was used in robocalls urging voters to stay home rather than vote in the state’s presidential primary.

In Slovakia, a deepfake emerged in which a pro-NATO candidate appeared to boast about rigging the upcoming election. He went on to lose to a candidate who supported closer ties to Putin (CNN).

However, grave threats also represent significant opportunities for bold entrepreneurs to step into the breach. Safeguarding elections is both a civic responsibility and a lucrative market. There is space here for a pioneering biometric tech startup to shore up election security in this crucial year.

So, what does a biometric tech firm need to do to claim this market as its own?

This publication has rightly reported on the emerging use of biometric tech in elections in Papua New Guinea and Kurdistan, but no country with a large population has yet properly integrated biometrics into its electoral processes. This is largely because of valid concerns over privacy.

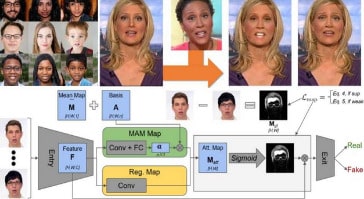

Deepfakes, however real they appear to the human eye, typically contain flaws at the microscopic level. We struggle to spot these inconsistent pixels and vocal fluctuations, but biometric technologies can register them. The technology compares the person a recording appears to show against genuine stored biometric data, and on that basis verifies whether the recording is authentic.
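The article does not specify how the matching step works. One common approach is to compare a feature vector (an embedding) extracted from the recording by a face- or voice-recognition model against the voter’s enrolled template. The minimal sketch below assumes the embeddings already exist; the function names, the example vectors, and the 0.85 threshold are hypothetical, and detecting pixel-level artefacts is a separate task not shown here.

```python
import math
from typing import Sequence

# Hypothetical threshold; a real system would tune this on labelled data.
MATCH_THRESHOLD = 0.85


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def verify_recording(recording_embedding: Sequence[float],
                     enrolled_template: Sequence[float],
                     threshold: float = MATCH_THRESHOLD) -> bool:
    """Return True if the recording's features are consistent with the enrolled template."""
    return cosine_similarity(recording_embedding, enrolled_template) >= threshold


# Illustrative vectors only (hypothetical, not real biometric data).
enrolled = [0.2, 0.9, 0.4]
genuine_ok = verify_recording([0.21, 0.88, 0.41], enrolled)   # close to the template
impostor_ok = verify_recording([0.9, 0.1, 0.2], enrolled)     # far from the template
```

Cosine similarity is used here because it compares the direction of two vectors regardless of their scale; production systems may use other distance measures.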

There are variations of this process, but at its core, this is how biometric-based election security would work. The obvious concern it raises is the security of the stored biometric data: any company trying to stake a claim to this market will have to be able to guarantee its privacy.

Firstly, secure data-handling practices need to be updated and followed religiously. This involves standard procedures such as anonymization and encryption, but also the integration of new technologies.
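As one illustration of these standard procedures, voter identifiers can be replaced with keyed pseudonyms before they are stored. The sketch below uses HMAC-SHA256 from Python’s standard library. Strictly speaking this is pseudonymization rather than full anonymization, because whoever holds the key can still re-link records. The key handling, the field names, and the identifier `NH-000123` are hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonymize(voter_id: str, key: bytes) -> str:
    """Replace a voter identifier with a keyed HMAC-SHA256 pseudonym (hex string)."""
    return hmac.new(key, voter_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical: in practice the key would be held in a key-management service
# or hardware security module, never stored alongside the data it protects.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical record; only the pseudonym, not the raw identifier, is stored.
record = {"voter_id": "NH-000123", "template_ref": "tmpl-42"}
stored = {
    "voter_pseudonym": pseudonymize(record["voter_id"], PSEUDONYM_KEY),
    "template_ref": record["template_ref"],
}
```

A keyed HMAC is preferred over a plain hash here because voter identifiers often follow predictable formats, and an unkeyed hash of a predictable value can be reversed by simply hashing every candidate.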

AI can be used in databases to detect unauthorized alterations and tampering. It can also support encryption by managing the generation and rotation of encryption keys. Rigorous role-based access control should be enforced, and the principle of least privilege applied at every level.
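The sketch below illustrates two of these controls together. It uses a deny-by-default role-based access check and records every attempt in a hash-chained log, so that later edits to the log can be detected. Note that this swaps in a plain cryptographic hash chain for tamper evidence; it is not the AI-driven detection the paragraph describes, and it does not cover key rotation. The role names and permissions are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "auditor": {"read_audit_log"},
    "enrolment_officer": {"create_template"},
    "verifier": {"match_template"},
}

GENESIS_HASH = "0" * 64


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's canonical JSON."""
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AccessLog:
    """Append-only log in which each entry commits to the hash of the previous one."""
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        self.entries.append(
            {"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)}
        )

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


def authorize(role: str, action: str, log: AccessLog) -> bool:
    """Deny by default; allow only actions explicitly granted to the role, and log the attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append({"role": role, "action": action, "allowed": allowed})
    return allowed


log = AccessLog()
can_match = authorize("verifier", "match_template", log)    # granted
can_enrol = authorize("verifier", "create_template", log)   # denied: not in the verifier role
chain_ok_before = log.verify()

# Simulate someone quietly rewriting a past log entry.
log.entries[0]["event"]["allowed"] = False
chain_ok_after = log.verify()
```

A hash chain only makes tampering evident if an attacker cannot rewrite the whole chain, so the latest hash would, in practice, need to be anchored somewhere the attacker does not control.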

Secondly, biometric templates, such as a mathematical representation of a voter’s facial geometry, are derived from this raw data. They are also a critical vulnerability, because they can be exploited for fraud and manipulation even more readily than the raw data itself.

Cryptographic hashing can be used so that the raw biometric data does not have to be stored alongside the templates. ‘Salts’, random values added to each input before it is hashed, are also necessary: they strengthen the hashing process against precomputed lookup attacks, just as they do for stored passwords.
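The sketch below shows salted hashing with Python’s standard `hashlib.pbkdf2_hmac`, using a fresh random salt per record so that identical inputs produce different stored digests. One caveat: a cryptographic hash only confirms byte-identical inputs, and two captures of the same face never yield identical templates. Matching directly on protected templates therefore relies on specialised template-protection schemes (for example cancelable biometrics or fuzzy extractors), which are not shown. The record value and the iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Illustrative iteration count; choose this per current guidance and hardware.
ITERATIONS = 200_000


def hash_with_salt(data: bytes, salt: Optional[bytes] = None,
                   iterations: int = ITERATIONS) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using PBKDF2-HMAC-SHA256 with a per-record random salt."""
    if salt is None:
        salt = os.urandom(16)  # a new random salt for every stored record
    digest = hashlib.pbkdf2_hmac("sha256", data, salt, iterations)
    return salt, digest


def matches(data: bytes, salt: bytes, expected: bytes,
            iterations: int = ITERATIONS) -> bool:
    """Re-hash with the stored salt and compare in constant time."""
    _, digest = hash_with_salt(data, salt, iterations)
    return hmac.compare_digest(digest, expected)


# Hypothetical stored value; the same input hashed twice gives different digests.
record = b"template-store-link:tmpl-42"
salt1, digest1 = hash_with_salt(record)
salt2, digest2 = hash_with_salt(record)
```

Because each record carries its own salt, an attacker cannot precompute one table of digests and apply it across the whole database.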

A third variable that any prospective biometric firm operating in elections must control is its supply chain. It doesn’t matter how advanced a firm’s hashing is if the data has already been compromised by a firmware hack at the source.
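One basic supply-chain check is to refuse any firmware image whose digest does not match a value pinned in advance. In this minimal sketch, the pinned digest is assumed to be obtained from the vendor over a separate, trusted channel, and the file contents are placeholders. Production devices would more typically verify a vendor signature through a secure-boot chain rather than rely on a single pinned hash.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 65536) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def firmware_is_trusted(path: Path, pinned_digest: str) -> bool:
    """Accept the image only if its digest matches the pinned value."""
    return hmac.compare_digest(sha256_file(path), pinned_digest.lower())


# Demonstration with a placeholder image in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    image = Path(tmp) / "firmware.bin"
    image.write_bytes(b"placeholder firmware v1")
    pinned = hashlib.sha256(b"placeholder firmware v1").hexdigest()  # hypothetical pinned value

    trusted_original = firmware_is_trusted(image, pinned)
    image.write_bytes(b"placeholder firmware v1 + implant")  # simulated tampering at source
    trusted_after_tamper = firmware_is_trusted(image, pinned)
```

A pinned digest only proves that the image is the one the vendor published; it cannot detect a compromise that happened before the vendor computed that digest.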

Third-party vendor privacy assessments must form the basis of procurement decisions, and biometric companies need to work with vendors to implement secure development life cycles that involve regular security assessments, code reviews, and vulnerability testing.

Developing a biometric product that doesn’t just meet these baseline standards but goes beyond them to guarantee voter privacy will be expensive. Entering any highly regulated market is bold, and few markets are regulated as rigorously as election technology. But the first biometric startup to develop tech that neuters deepfakes in an electoral setting will rapidly find itself in global demand. The potential rewards, both commercial and democratic, will then more than justify the intense scrutiny.

Article: Deepfake threats are a lucrative opportunity – biometric data privacy is the key to unlocking it