Digital harassment is a problem in many corners of the internet, such as forums, comment sections and game chat. In this article, you can experiment with techniques for automatically detecting users who misbehave, preferably as early in the conversation as possible. As you will see, neural networks do a better job than simple word lists, but they are also black boxes; one of our goals is to show how these networks arrive at their decisions. Also, we apologize in advance for all of the swear words :).

According to a 2016 report, 47% of internet users have experienced online harassment or abuse [1], and 27% of all American internet users self-censor what they say online because they are afraid of being harassed. Similarly, a survey by the Wikimedia Foundation (the organization behind Wikipedia) showed that 38% of the surveyed editors had encountered harassment, and over half of them said this lowered their motivation to contribute in the future [2]. A 2018 study found that 81% of American respondents wanted companies to address this problem [3]. If we want safe and productive online platforms where users do not chase each other away, something needs to be done.

One solution might be to employ human moderators who read everything and step in when somebody crosses a line, but this is not always feasible (nor good for the moderators' mental health): popular online games can have as many people playing at any one time as a large city has inhabitants, with hundreds of thousands of conversations taking place simultaneously. And, much like a city's population, these players can be very diverse. At the same time, certain online games are notorious for their toxic communities. According to a 2020 survey by League of Legends player Celianna, 98% of League of Legends players have been ‘flamed’ (drawn into an online argument involving personal attacks) during a match, and 79% have been harassed afterwards [4]. The following conversation is, sadly, not atypical for the game:

Z: fukin bot n this team…. so cluelesss gdam

V: u cunt

Z: wow ….u jus let them kill me

V: ARE YOU RETARDED

V: U ULTED INTO 4 PEOPLE

Z: this game is like playign with noobs lol….complete clueless lewl

L: ur shyt noob

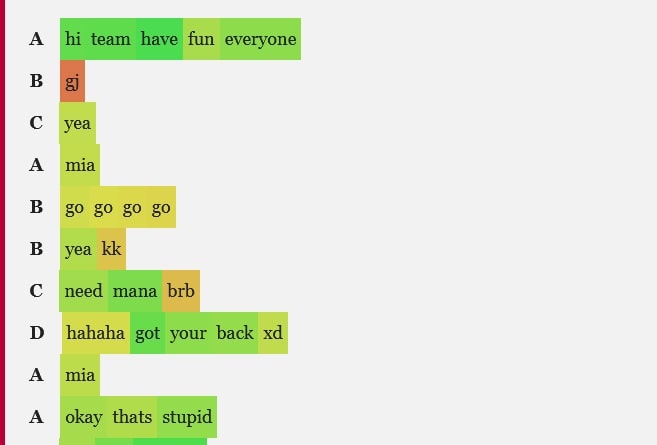

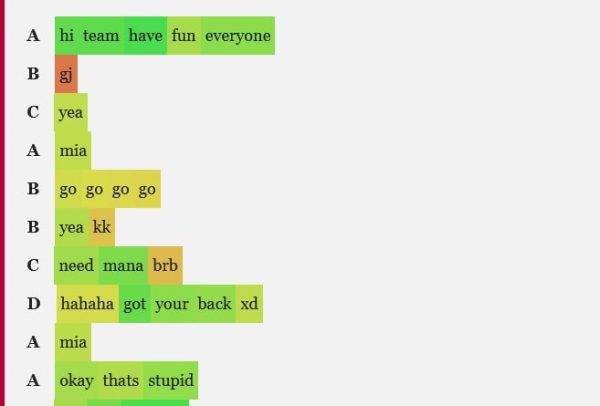

For this article, we therefore use a dataset of conversations from this game and show different techniques for automatically separating ‘toxic’ players from ‘normal’ players. To keep things simple, we selected 10 real conversations, each between 10 players and each containing about 200 utterances: utterance 1 is the first chat message in the game, and utterance 200 is one of the last (most of the conversations were a few messages longer than 200; we truncated them to keep the conversations uniform). In each of the 10 conversations, exactly 1 of the 10 players misbehaves. The goal is to build a system that can pinpoint this one player, preferably early in the conversation: if we only find the toxic player by utterance 200, the damage is already done.
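The setup above can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the article's actual data pipeline: the `Utterance` and `Conversation` classes, the `truncate` and `detection_point` helpers, and the convention that a detector reports the utterance number at which it first flags each player are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_UTTERANCES = 200  # conversations are cut off after this many chat messages


@dataclass
class Utterance:
    player: str  # hypothetical player identifier, e.g. "Z" or "V"
    text: str


@dataclass
class Conversation:
    utterances: list[Utterance]
    toxic_player: str  # ground-truth label: the one player who misbehaves

    def players(self) -> set[str]:
        """All players who speak at least once."""
        return {u.player for u in self.utterances}


def truncate(conv: Conversation, limit: int = MAX_UTTERANCES) -> Conversation:
    """Keep only the first `limit` utterances so every conversation has the same length."""
    return Conversation(conv.utterances[:limit], conv.toxic_player)


def detection_point(flags: dict[str, int], conv: Conversation) -> int | None:
    """Given the utterance number at which a detector first flagged each player,
    return when the toxic player was caught, or None if they never were.
    Lower is better: a flag at utterance 200 comes too late to prevent harm."""
    return flags.get(conv.toxic_player)


if __name__ == "__main__":
    # Hypothetical toy conversation: 230 messages cycling through 10 players.
    msgs = [Utterance(player=f"P{i % 10}", text="gg") for i in range(230)]
    conv = truncate(Conversation(msgs, toxic_player="P3"))
    print(len(conv.utterances))  # 200
    print(len(conv.players()))  # 10
    print(detection_point({"P3": 42, "P7": 180}, conv))  # 42
```

Reporting an utterance number rather than a plain yes/no verdict is what makes it possible to compare detectors on how early they catch the toxic player, not just on whether they catch them at all.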

Can’t we just use a list of bad words?

A first approach to automated detection might be to use a simple list of swear words and insults such as ‘fuck’, ‘suck’, ‘noob’ and ‘fag’, and to label a player as toxic once they use words from the list more often than a given threshold. Below, you can slide through ten example conversations simultaneously. Normal players are represented by green faces, toxic players by red faces. When our simple system marks a player as toxic, that player gets a yellow toxic symbol. These are all the possible outcomes:

| | Normal players | Toxic players |
| --- | --- | --- |
| System says nothing (yet) | | |
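The word-list approach can be sketched in a few lines of Python. This is a hedged illustration, not the detector behind the visualization: `BAD_WORDS` holds only the four example words from the text, while the exact-token matching, the default threshold of 2, and the helper names `tokenize`, `word_list_detector` and `outcome` are our own hypothetical choices. The detector walks through a conversation in order and records the utterance number at which each player's running bad-word count first exceeds the threshold; `outcome` combines that with the ground-truth label to give one of the possible combinations of true label and system verdict.

```python
from __future__ import annotations

import re
from collections import Counter

# Only the example words mentioned in the text; a real list would be far longer.
BAD_WORDS = {"fuck", "suck", "noob", "fag"}


def tokenize(text: str) -> list[str]:
    """Lowercase a message and split it into runs of ASCII letters."""
    return re.findall(r"[a-z]+", text.lower())


def word_list_detector(utterances: list[tuple[str, str]], threshold: int = 2) -> dict[str, int]:
    """Scan (player, text) pairs in chat order.

    A player is flagged once their running count of exact bad-word matches
    exceeds `threshold`. Returns {player: 1-based utterance number of the flag}.
    """
    counts: Counter[str] = Counter()
    flagged: dict[str, int] = {}
    for number, (player, text) in enumerate(utterances, start=1):
        # Each token that appears verbatim in the word list adds one to the count.
        counts[player] += sum(token in BAD_WORDS for token in tokenize(text))
        if player not in flagged and counts[player] > threshold:
            flagged[player] = number
    return flagged


def outcome(player: str, toxic_player: str, flagged: dict[str, int]) -> str:
    """Combine the ground-truth label with the detector's verdict."""
    truth = "toxic" if player == toxic_player else "normal"
    verdict = "flagged" if player in flagged else "not flagged (yet)"
    return f"{truth} player, {verdict}"


if __name__ == "__main__":
    # The example chat from the article, as (player, message) pairs.
    chat = [
        ("Z", "fukin bot n this team…. so cluelesss gdam"),
        ("V", "u cunt"),
        ("Z", "wow ….u jus let them kill me"),
        ("V", "ARE YOU RETARDED"),
        ("V", "U ULTED INTO 4 PEOPLE"),
        ("Z", "this game is like playign with noobs lol….complete clueless lewl"),
        ("L", "ur shyt noob"),
    ]
    print(word_list_detector(chat, threshold=0))  # {'L': 7}
```

Run on the example chat from earlier with a threshold of 0, this sketch flags only player L, for ‘noob’ at utterance 7: exact matching misses misspellings such as ‘fukin’ and plural forms such as ‘noobs’, and insults that are not on the list pass unnoticed.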